Our audio fingerprinting, combined with the post-transmission EPG data we receive, automates music reporting for TV programs.

We’re sensitive beings. We often reach for the tissues while listening to our favourite sad song or watching a heartwarming movie scene accompanied by a melancholy number. It’s the music in particular that makes us feel, often inducing emotions that are registered in our cerebrum and releasing hormones to the ocular area, until the tears inevitably stream down our cheeks. We can’t help it; it’s science.

Over the last decade, the growing daily consumption of audiovisual productions has brought about an increase in music synchronisation – and music is our thing; we follow her wherever she goes. In 2017, earnings from audiovisual repertoire increased by 6.8% worldwide, reaching 50 million US dollars. We believe the key to the fair distribution of royalties is generating returns accurately and transparently, and our collaborative digitalisation of cue-sheets does exactly that.

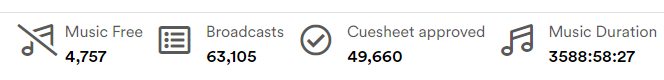

As part of the network of technologies that make up what we call the BMAT Operating System, our audio fingerprinting, combined with the post-transmission EPG data we receive, automates the generation of music reports for TV programs. It detects music and identifies it against our database. We then generate the form, known as a cue-sheet, that enables composers to receive royalties for the use of their music on-screen.
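To make this pipeline a little more concrete, here is a minimal Python sketch of one plausible way to combine fingerprint detections with post-transmission EPG slots into per-program cue-sheet rows. It is not BMAT's implementation: the `Detection`, `EpgEntry` and `CueSheetRow` types, the `build_cue_sheets` function, the in-memory catalogue and all sample data are hypothetical, and the fingerprint matching itself is assumed to have already happened upstream.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Detection:
    """A fingerprint match: a known reference track heard on air (hypothetical)."""
    track_id: str
    start: datetime
    end: datetime


@dataclass
class EpgEntry:
    """A post-transmission EPG slot: the program that actually aired (hypothetical)."""
    program: str
    start: datetime
    end: datetime


@dataclass
class CueSheetRow:
    """One line of a cue-sheet: which work was used and for how long."""
    program: str
    title: str
    composer: str
    duration: timedelta


def build_cue_sheets(detections, epg, catalogue):
    """Group identified music uses by the program whose airtime they overlap.

    `catalogue` maps track IDs to owner-supplied metadata. Detections with no
    catalogue entry are skipped here; we assume they would be left for the
    producer or publisher to complete during validation.
    """
    sheets = {}
    for slot in epg:
        rows = sheets.setdefault(slot.program, [])
        for det in detections:
            # Clip the detection to the program's airtime, so music that
            # spans a program boundary is split between the two programs.
            start = max(det.start, slot.start)
            end = min(det.end, slot.end)
            if start >= end:
                continue  # no overlap with this program
            meta = catalogue.get(det.track_id)
            if meta is None:
                continue  # unidentified track
            rows.append(CueSheetRow(slot.program, meta["title"],
                                    meta["composer"], end - start))
    return sheets


# Usage with made-up data: two programs and three detections.
t0 = datetime(2019, 1, 1, 20, 0)
epg = [
    EpgEntry("Evening News", t0, t0 + timedelta(minutes=30)),
    EpgEntry("Drama", t0 + timedelta(minutes=30), t0 + timedelta(minutes=90)),
]
detections = [
    Detection("trk-1", t0, t0 + timedelta(minutes=1)),
    Detection("trk-2", t0 + timedelta(minutes=29), t0 + timedelta(minutes=32)),
    Detection("trk-x", t0 + timedelta(minutes=40), t0 + timedelta(minutes=41)),
]
catalogue = {
    "trk-1": {"title": "News Theme", "composer": "A. Composer"},
    "trk-2": {"title": "Sad Strings", "composer": "B. Writer"},
}

sheets = build_cue_sheets(detections, epg, catalogue)
for program, rows in sheets.items():
    for row in rows:
        print(program, "|", row.title, "|", row.composer, "|", row.duration)
```

Clipping each detection to its EPG slot means a single piece of music that runs across a program boundary (here "Sad Strings") is credited to both programs, each with only the time it actually aired there, while the unidentified "trk-x" never reaches a cue-sheet.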

Whether they’re the main tunes that make us recognise a series, or the background music that sneaks up on us only to become the soundtrack to us wiping away our tears, these are valuable components which deserve recognition.

Our tool generates cue-sheets from the perspective of both the broadcaster and the music producer and publisher, who can then check and validate the reports, adding any necessary extra information before sending them on to the collecting societies.

Our automatic identification also reduces the risk of human error, such as a misspelled title or artist. Such errors can be amplified once the report reaches the collecting society, where it is processed, cross-referenced and has its metadata extracted within the society’s own database before royalties are distributed. The titles in our reports use the metadata provided by the content owners.

Once approved by the societies, the reports make themselves at home in our BMAT database, ready to be automatically reused every time the same program is reissued – no matter where in the world.

Although audiovisual producers usually know the name of the work and the contributors for their in-house productions, they can struggle to identify the creators of other music they use. That’s why our tool is useful both to them, since they can easily deliver complete reports, and to the CMOs (collective management organisations), which no longer have to search for this data and only need to check the report against their own database.

After an EU-wide bidding process, we began providing the German public broadcasters ARD, ZDF and Deutschlandradio (DLR) with identification of all music played on their TV and radio channels. Our audio fingerprinting enables them to automatically generate music royalty reports. Our platform is fed by their video streams, audio streams and program data, fuelling the identifications needed to generate auditable music usage reports. “We are glad to have found a service provider with BMAT, whose technology counts to the absolute top in this area.” Ulrich Geiger, Project Management ARD / ZDF.

Automating cue-sheet generation with this kind of collaborative validation reduces the workload for producers and improves metadata consistency, ultimately benefiting the deserving creators of the soundtracks we know, love, laugh and cry to.

Having stood the test of time in Germany, our music reporting tool for broadcasters is now fluent in many languages and ready to be released into the wild. With our tool, production houses keep control over their own productions’ metadata, while the audio fingerprinting technology built into it generates accurate reports.

Get in touch with us or keep reading to find out more.

Written by Kelly, Head of Content Strategy
