Our music identification technology has taken us on many journeys of exploration. Here’s just one that we wanted to share with you.

Years ago, our love of music identification led us to investigate whether language and music were the same thing.

In the process, we found out that the universe of tunes behaves rather like a social network, and that popular western music could actually be running out of unique melodies.

Men sang long before they spoke. Apparently, our innate fascination with repetition made our ancestors first mimic the sounds of nature (a beast’s roar, a bird’s whistle, a baby’s cry). They created musical forms of expression long before they could modulate their voices into words. It’s unclear at which point the creatures we descended from realised such singing could really communicate ideas and feelings. Either way, this was one giant leap for the creation of language.

Moving on to more contemporary and less hairy times (for some of us): 15 years ago, a bunch of us were at the MTG researching music and audio technologies. In those days, we were easily excitable in almost every sense, especially when exploring disciplines unknown to us.

Some scientific publications on Complex Networks had caught our attention. They stated that music and language were the same thing. Because we were quite good at playing instruments but not as good at talking to people, we thought there was no way this could be true. The music-language comparison couldn’t be right.

So, we decided to take the matter into our own hands.

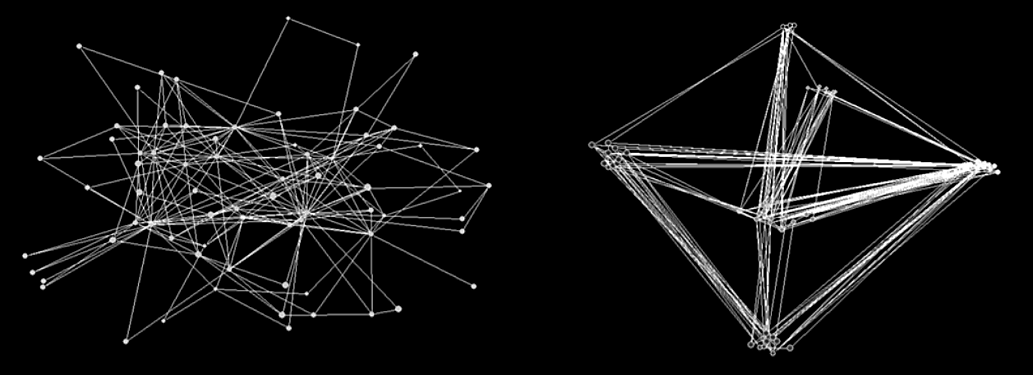

We built different types of Complex Networks. For music, we took more than 10,000 western contemporary music scores covering a broad range of styles and defined three different lexicons: note-note, note-duration and interval. For the note-note network, we treated notes as the interacting units of a Complex Network. Accordingly, notes were nodes, and a link existed between two notes if they co-occurred in a music piece. Connecting this set of notes allows an unlimited number of melodies to be constructed, of which the melodies in our corpus were only a small sample. For the note-duration and interval Complex Networks, we did pretty much the same.

Node and link representation for note-note, note-duration, and intervals.
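To make the construction concrete, here is a minimal Python sketch of how the three lexicons could be turned into networks. It is an illustration only, not the original research code, and it rests on assumptions the text does not spell out: a melody is represented as a list of (MIDI pitch, duration in beats) pairs, "co-occurrence" is read as two units appearing consecutively in a piece, links are undirected and unweighted, and the names `tokens`, `build_network` and `toy_melody` are hypothetical.

```python
# Hypothetical representation: a melody is a list of (MIDI pitch, duration in beats).
Melody = list[tuple[int, float]]


def tokens(melody: Melody, lexicon: str) -> list:
    """Turn a melody into the sequence of units used as network nodes."""
    if lexicon == "note":
        return [pitch for pitch, _ in melody]
    if lexicon == "note-duration":
        return list(melody)
    if lexicon == "interval":
        pitches = [pitch for pitch, _ in melody]
        # Interval = signed distance in semitones between consecutive notes.
        return [b - a for a, b in zip(pitches, pitches[1:])]
    raise ValueError(f"unknown lexicon: {lexicon}")


def build_network(melodies: list[Melody], lexicon: str) -> dict:
    """Build an undirected graph as a dict mapping node -> set of neighbours.

    Assumption: two units are linked when they appear one after the other
    in at least one piece. Self-loops (a unit followed by itself) are ignored.
    """
    graph: dict = {}
    for melody in melodies:
        seq = tokens(melody, lexicon)
        for unit in seq:
            graph.setdefault(unit, set())  # register the node even if isolated
        for a, b in zip(seq, seq[1:]):
            if a != b:
                graph[a].add(b)
                graph[b].add(a)
    return graph


# Toy data, not taken from the corpus: C4, D4, E4 (held longer), back to C4.
toy_melody: Melody = [(60, 1.0), (62, 1.0), (64, 2.0), (60, 1.0)]

for name in ("note", "note-duration", "interval"):
    print(name, build_network([toy_melody], name))
```

The same function would work for the language networks by feeding it sequences of words, syllables or tri-phonemes instead of notes, since the construction only depends on which units follow each other.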

In parallel, we built a set of Complex Networks for language, based on the interaction of tri-phonemes, syllables and words in El Quijote by Cervantes. As with music, words, syllables and tri-phonemes were nodes, and a link was created wherever two of them co-occurred.

The note-duration Complex Network obtained from Michael Jackson’s Billie Jean, graphed with the default layout (left) and with the nodes corresponding to the same note manually grouped together (right).

We looked at the results. The music networks displayed complex patterns that differed from those of the language networks. Ha! We found that music, as opposed to language, shared properties with other complex networks, namely the small-world phenomenon and a scale-free degree distribution. Just like in social networks, where a friend of someone with a lot of friends will most probably have a lot of friends of their own, the properties of the musical networks told us that, whatever lexicon we chose to define the nodes, the most frequent melodic atoms belong to a very crowded and interconnected community while the rare ones are confined to the ghettos.
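For readers unfamiliar with these two properties, the sketch below shows the quantities usually inspected to check for them, using only the Python standard library. It is an illustrative sketch, not the analysis behind the results above. A scale-free network shows a heavy-tailed degree distribution (a few hubs, many poorly connected nodes), and a small-world network combines high clustering with short average path lengths. The graph format (a dict mapping each node to a set of neighbours), the function names and the toy graph are all assumptions made for this example.

```python
from collections import Counter, deque
from itertools import combinations


def degree_distribution(graph: dict) -> Counter:
    """Count how many nodes have each degree (number of neighbours)."""
    return Counter(len(neighbours) for neighbours in graph.values())


def average_clustering(graph: dict) -> float:
    """Mean local clustering: how often two neighbours of a node are linked."""
    total = 0.0
    for neighbours in graph.values():
        k = len(neighbours)
        if k < 2:
            continue  # nodes with fewer than two neighbours contribute 0
        linked = sum(1 for a, b in combinations(neighbours, 2) if b in graph[a])
        total += 2 * linked / (k * (k - 1))
    return total / len(graph)


def average_path_length(graph: dict) -> float:
    """Mean shortest-path length over all reachable ordered pairs (BFS)."""
    total, pairs = 0, 0
    for source in graph:
        dist = {source: 0}
        queue = deque([source])
        while queue:
            node = queue.popleft()
            for nxt in graph[node]:
                if nxt not in dist:
                    dist[nxt] = dist[node] + 1
                    queue.append(nxt)
        total += sum(dist.values())
        pairs += len(dist) - 1
    return total / pairs if pairs else 0.0


# Toy graph, invented for illustration: "C" is a hub, "G" and "A" are peripheral.
toy = {
    "C": {"D", "E", "G", "A"},
    "D": {"C", "E"},
    "E": {"C", "D"},
    "G": {"C"},
    "A": {"C"},
}

print(degree_distribution(toy))
print(average_clustering(toy))
print(average_path_length(toy))
```

On a real corpus, one would compare the clustering and path length against a random graph with the same number of nodes and links, and plot the degree distribution on log-log axes to see whether it follows a power law.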

While proving the music-language identity wrong, we had accidentally proven that, at least for western commercial popular songs, uncommon melodies very rarely interact with trendy ones. We also know that this music typically sticks to the twelve-tone tempered scale and applies strict constraints on key, tempo and chroma to fit what people’s ears want to hear.

If the commercial music space is so confined, can we conclude that we are running out of popular melodies? Could it be that melodies cannibalise each other? And is it possible that any melody or music identification technology – designed to match works and not sound recordings – is doomed to generate ‘false’ positives? Yes, yes, and yes. More detailed answers follow in Part 2.

Written by Àlex, CEO

See Part 2 – Running Out of Melodies
